Physical Adversarial Examples

DARTS: Deceiving autonomous cars with toxic signs

Overview

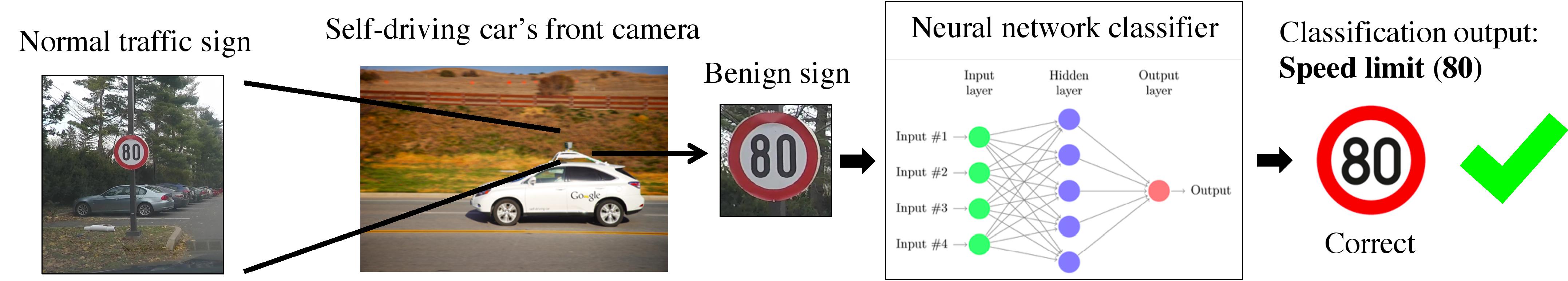

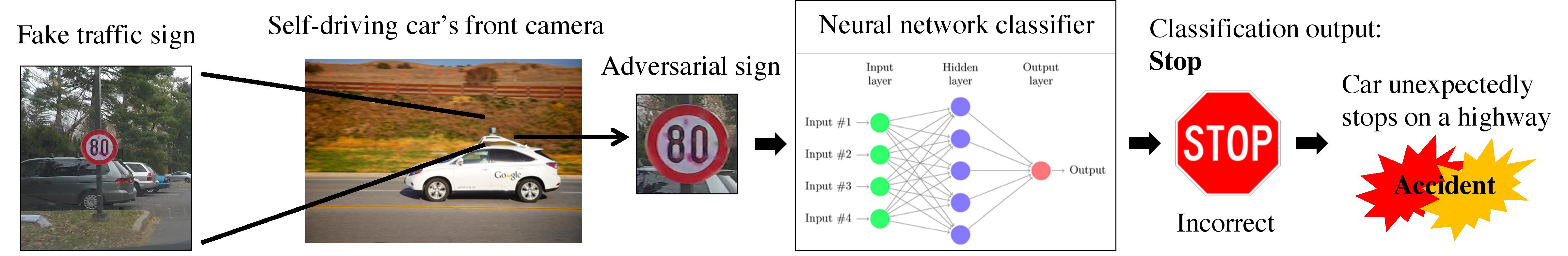

Autonomous cars are one of the most important new applications of machine learning. Computer vision systems that rely on machine learning are a crucial component of these cars. However, such systems are not robust against adversaries who can feed them images containing carefully crafted perturbations designed to cause misclassification. In our paper, we demonstrate that these adversarial examples pose a threat to traffic sign recognition systems that interact with the physical world.
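To make the idea of a "carefully crafted perturbation" concrete, here is a minimal sketch of the fast gradient sign method (FGSM) against a toy logistic-regression classifier. FGSM is a standard textbook attack chosen here for brevity; it is not presented as the method from the paper. The weights, bias, and 4-pixel "image" are hypothetical values chosen only for illustration.

```python
import math

# Hypothetical toy classifier: logistic regression on a flattened 4-pixel
# image. These weights and the bias are made up for illustration only.
WEIGHTS = [1.5, -2.0, 0.5, 1.0]
BIAS = -0.25


def predict_prob(x):
    """Probability that image x belongs to class 1 (e.g. 'stop sign')."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def _sign(g):
    """Return +1, 0, or -1 depending on the sign of g."""
    return (g > 0) - (g < 0)


def fgsm(x, label, eps):
    """Fast gradient sign method: move every pixel by eps in the direction
    that increases the cross-entropy loss for the true label, then clip
    the result to the valid pixel range [0, 1]."""
    p = predict_prob(x)
    # For logistic regression with cross-entropy loss, dL/dx_i = (p - label) * w_i.
    grad = [(p - label) * w for w in WEIGHTS]
    return [min(1.0, max(0.0, xi + eps * _sign(g))) for xi, g in zip(x, grad)]


x = [0.8, 0.2, 0.5, 0.6]  # hypothetical clean input, classified as class 1
x_adv = fgsm(x, label=1, eps=0.3)
print(round(predict_prob(x), 3), round(predict_prob(x_adv), 3))  # prints 0.802 0.475
```

No pixel changes by more than `eps`, yet the predicted probability falls below 0.5 and the label flips. Physical attacks face the extra challenge that the perturbation must survive printing, viewing angle, and lighting, which this digital toy ignores.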

We move beyond purely digital attacks by printing adversarial examples on posters and driving past them. We show that adversarial examples can be created not only from traffic signs but also from arbitrary signs and logos. Videos of our drive-by tests are below.

Adversarial Traffic Sign Drive-by Test

Custom Sign Drive-by Test

Authors

Chawin Sitawarin (UC Berkeley); Arjun Nitin Bhagoji (Princeton University); Arsalan Mosenia (Princeton University); Mung Chiang (Purdue University); Prateek Mittal (Princeton University)